Whisper Is the AI That Can Subtitle Anything

Today, OpenAI has published a new artificial intelligence model that can transcribe speech with 50% fewer errors than previous models. The new model, called Whisper, is robust to accents, background noise, and technical language. It also enables transcription in 99 different languages, as well as translation from those languages into English. The system has full support for punctuation and was trained on 680,000 hours of multilingual data collected from the Internet. – Aron Brand

OpenAI is everyone’s favorite AI lab. Best known for DALL-E and GPT-3 (its image generation and text generation models), it is now taking speech recognition to the next level with Whisper, which it has released as open source.

Use Cases for Whisper

Whisper has major implications for anything that leans on speech-to-text transcription and translation.

Closed Captioning

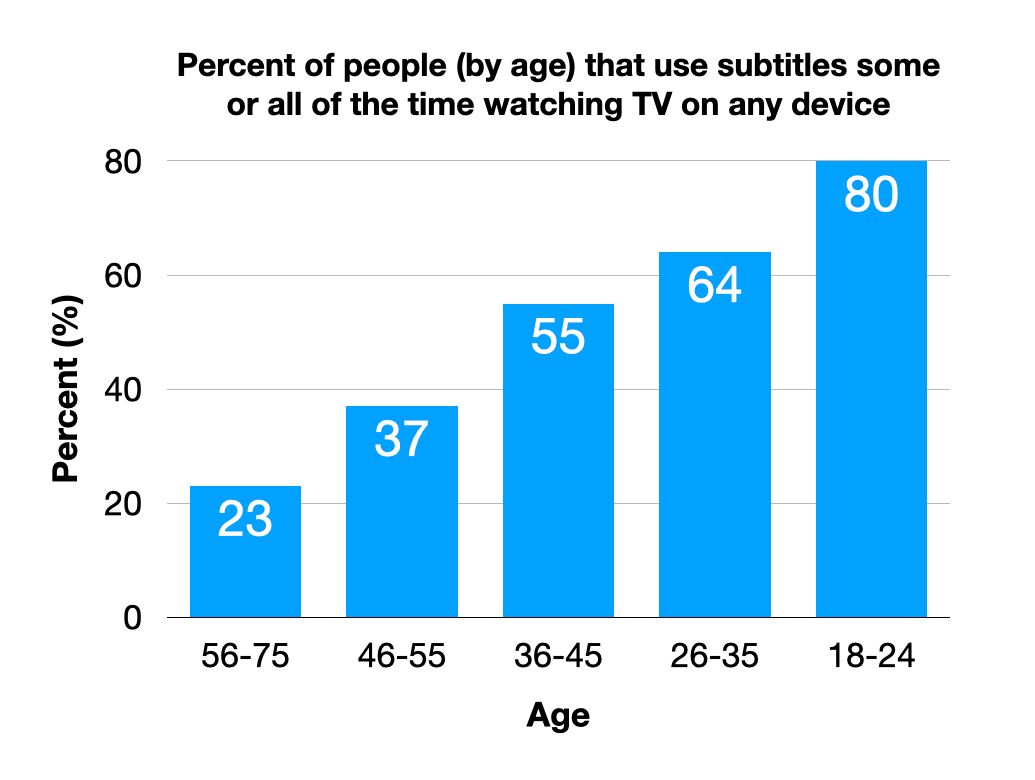

It’s one of the biggest points of contention in the modern world, “Do we watch Netflix with subtitles or not?” However, data shows that the younger you are the more likely you are to use subtitles/captions on all content:

Every video delivery network must design its platform with auto-transcription front and center. Whisper will go a long way toward making this happen efficiently, effectively, and at scale.
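To make auto-captioning concrete: in the open-source `openai-whisper` Python package, `whisper.load_model("base").transcribe(path)` returns a dict whose `"segments"` list holds each segment’s `"start"` and `"end"` times in seconds plus its `"text"`. Below is a minimal sketch, assuming that segment shape, of turning those segments into an SRT caption file using only the standard library. `segments_to_srt` and `format_timestamp` are hypothetical helpers written for illustration, not Whisper functions.

```python
from __future__ import annotations


def format_timestamp(seconds: float) -> str:
    """Convert a time in seconds to an SRT timestamp (HH:MM:SS,mmm)."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments: list[dict]) -> str:
    """Render Whisper-style segments ('start', 'end', 'text') as SRT caption blocks."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        start = format_timestamp(seg["start"])
        end = format_timestamp(seg["end"])
        # Whisper segment text often begins with a space; strip it for display.
        blocks.append(f"{index}\n{start} --> {end}\n{seg['text'].strip()}\n")
    # SRT separates caption blocks with a blank line.
    return "\n".join(blocks)


if __name__ == "__main__":
    # Hypothetical sample segments. With openai-whisper installed, they could
    # instead come from: whisper.load_model("base").transcribe("video.mp4")["segments"]
    sample = [
        {"start": 0.0, "end": 2.5, "text": " Hello there."},
        {"start": 2.5, "end": 5.0, "text": " Welcome back."},
    ]
    print(segments_to_srt(sample))
```

SRT is used here because most players and platforms accept it; WebVTT differs mainly in its `WEBVTT` header and its use of a period before the milliseconds.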

Professional Transcription

In healthcare, for example, doctors could use AI to transcribe patient notes, which would free up time for them to see more patients. In customer service, AI could be used to accurately transcribe customer calls, which would help businesses to resolve issues more quickly. And in law, AI could be used to transcribe court proceedings, which can reduce the cost of litigation. – Aron Brand

AI tools already exist for these use cases, but Whisper’s robustness to accents, background noise, and technical language should make it better at them.

Language Learning & Education

Whisper will change how we learn languages through content. I’m reminded of a Chrome extension called Language Reactor, which lets users learn languages while watching Netflix. Basically, the extension displays a second set of subtitles on Netflix so that users can pair dialogue in a language they know with dialogue in the language they are learning.
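Whisper can already produce both subtitle tracks such a tool needs from a single video: in the `openai-whisper` package, `model.transcribe(path)` returns segments in the spoken language, and `model.transcribe(path, task="translate")` returns English segments. Because the two passes are decoded separately, their segment boundaries may not line up. Here is a minimal sketch, assuming both tracks are lists of Whisper-style segment dicts, that pairs each original-language line with the English line it overlaps most in time. `pair_tracks` and `overlap` are hypothetical helpers, not part of Whisper.

```python
from __future__ import annotations


def overlap(a: dict, b: dict) -> float:
    """Seconds during which two segments (dicts with 'start'/'end') overlap."""
    return max(0.0, min(a["end"], b["end"]) - max(a["start"], b["start"]))


def pair_tracks(original: list[dict], english: list[dict]) -> list[tuple[str, str]]:
    """Pair each original-language segment with the English segment it overlaps most.

    Returns (original_text, english_text) tuples; english_text is "" when no
    English segment overlaps the original one.
    """
    pairs = []
    for seg in original:
        best = max(english, key=lambda e: overlap(seg, e), default=None)
        if best is not None and overlap(seg, best) > 0:
            pairs.append((seg["text"].strip(), best["text"].strip()))
        else:
            pairs.append((seg["text"].strip(), ""))
    return pairs


if __name__ == "__main__":
    # Hard-coded sample tracks standing in for two Whisper passes over one video.
    spanish = [{"start": 0.0, "end": 2.0, "text": " Hola."},
               {"start": 2.0, "end": 5.0, "text": " Muchas gracias."}]
    english = [{"start": 0.0, "end": 2.2, "text": " Hello."},
               {"start": 2.2, "end": 5.0, "text": " Thank you very much."}]
    for original_line, english_line in pair_tracks(spanish, english):
        print(f"{original_line} | {english_line}")
```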

Whisper could take this idea to all video content. I also think it would be cool if someone built a model that replaces, let’s say, 10% of the words with words from the new language. That way, you could build vocabulary in context.
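The word-swapping idea is simple enough to sketch. This minimal version assumes a hypothetical bilingual `glossary` dict (known-language word to new-language word) and subtitle text such as Whisper’s output, and randomly swaps about 10% of a line’s words for their translations. It only shows the sampling step; grammar, word order, and multi-word phrases are ignored. `mix_in_vocabulary` is a hypothetical helper.

```python
from __future__ import annotations

import random
import re


def _split_punct(token: str) -> tuple[str, str, str]:
    """Split a token into (leading punctuation, core word, trailing punctuation)."""
    m = re.match(r"^(\W*)(.*?)(\W*)$", token)
    return m.group(1), m.group(2), m.group(3)


def mix_in_vocabulary(sentence: str, glossary: dict[str, str],
                      ratio: float = 0.10, seed: int | None = None) -> str:
    """Replace about `ratio` of the words in `sentence` with glossary translations.

    Only words found in the glossary (compared lower-case, punctuation ignored)
    can be swapped, so a small glossary may yield fewer swaps than requested.
    Whitespace is normalized to single spaces.
    """
    words = sentence.split()
    if not words:
        return ""
    # Aim for ratio * word count swaps, but always at least one.
    target = max(1, round(len(words) * ratio))
    candidates = [i for i, w in enumerate(words)
                  if _split_punct(w)[1].lower() in glossary]
    rng = random.Random(seed)  # seeded so the same line gets the same swaps
    for i in rng.sample(candidates, k=min(target, len(candidates))):
        lead, core, trail = _split_punct(words[i])
        words[i] = lead + glossary[core.lower()] + trail
    return " ".join(words)


if __name__ == "__main__":
    glossary = {"cat": "gato", "dog": "perro", "mat": "alfombra"}  # hypothetical
    print(mix_in_vocabulary("the cat sat on the mat with the dog today", glossary, seed=0))
```

Seeding the random generator keeps a lesson consistent: re-watching the same scene shows the same swapped words.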

Globalized Content

MrBeast, the #5 most-subscribed YouTube channel, hires voice actors to dub his videos into Spanish, Russian, and Portuguese. He has said this nearly doubled his audience and global reach. For example, MrBeast en Español was one of the fastest-growing channels in 2021.

Whisper’s ability to translate speech from 99 languages into English will have a major impact on the globalization of content and help break down the language barrier in content creation. We’ll see far more non-English content, like Parasite or Squid Game, become global hits. This will also trickle down to YouTubers and other solo content creators.

Speech Impairments

I like that Hugging Face (the open-source AI platform) is opening up the conversation about applying Whisper to speech impairments. My mind goes to people who have watched someone close to them suffer a stroke and then have to relearn how to talk. For weeks and months, you can see the frustration on their faces as they speak but aren’t understood. It’s truly heartbreaking to watch. But if Whisper could accurately transcribe the speech of people with speech impairments, it might go a long way toward lifting the spirits of those facing speech challenges.

Upgrading Corporate AI

Because Whisper is open-source, it will improve transcription and translation across the digital world. Realistically, it could make YouTube’s closed captions more accurate and the real-time Google Translate app better. Speech recognition in virtual assistants (Alexa, Siri, etc.) could become more precise and thus better at understanding what we’re asking for. And content networks, from HBO to Netflix, will be able to deliver better translations and reach a worldwide audience.

The Business of Transcription

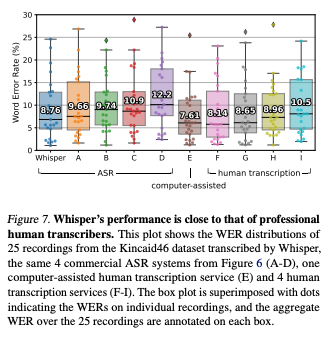

Whisper is going to change everything in the business of transcription and translation. Especially when you compare Whisper’s accuracy to existing ASR (Automatic Speech Recognition) software and human transcription services:

This is a defining “if you can’t beat them, join them” moment. Deepgram has already integrated Whisper’s codebase into its transcription software, and Dubverse has integrated Whisper into its translation and dubbing software.

Human transcription services like Rev will need to find a way to compete in this new world and provide value where AI cannot, especially since the chart above shows Whisper is already closing in on human transcription accuracy.
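Speech recognition accuracy is commonly reported as word error rate (WER), the metric the Whisper paper uses: the number of word substitutions, deletions, and insertions needed to turn a transcript into the reference, divided by the number of reference words. Below is a minimal sketch using a word-level Levenshtein distance. Published benchmarks also normalize casing and punctuation before scoring, which this sketch skips; `word_error_rate` is a hypothetical helper name.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j]: edit distance between the reference words processed so far
    # and the first j hypothesis words (initially all insertions).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```

A relative claim like “50% fewer errors” compares two such rates: a hypothetical drop from a WER of 0.20 to 0.10 is a 50% reduction.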

Otter, one of my favorite speech recognition tools for transcribing meeting notes, is in talks to integrate the Whisper codebase. But this could be a slippery slope if Otter is selling a service built on free, open-source software: at that point, anyone with coding skills could build an Otter competitor.

It’s quite possible that Whisper is about to dismantle the business of transcription and translation, and only those that adopt Whisper and provide a niche service (like some of the use cases listed earlier) will survive.
