Large Language Models for Beginners

By now, most of us know what a Large Language Model (LLM) can do, but few of us know how it does it. I’ve written dozens of notes on Generative AI in action, but I also recognize where my weaknesses are. I don’t want to be the person who keeps talking about the capabilities of LLMs like GPT-3 without knowing how the sauce is made, especially since LLMs are poised to automate so much knowledge work.

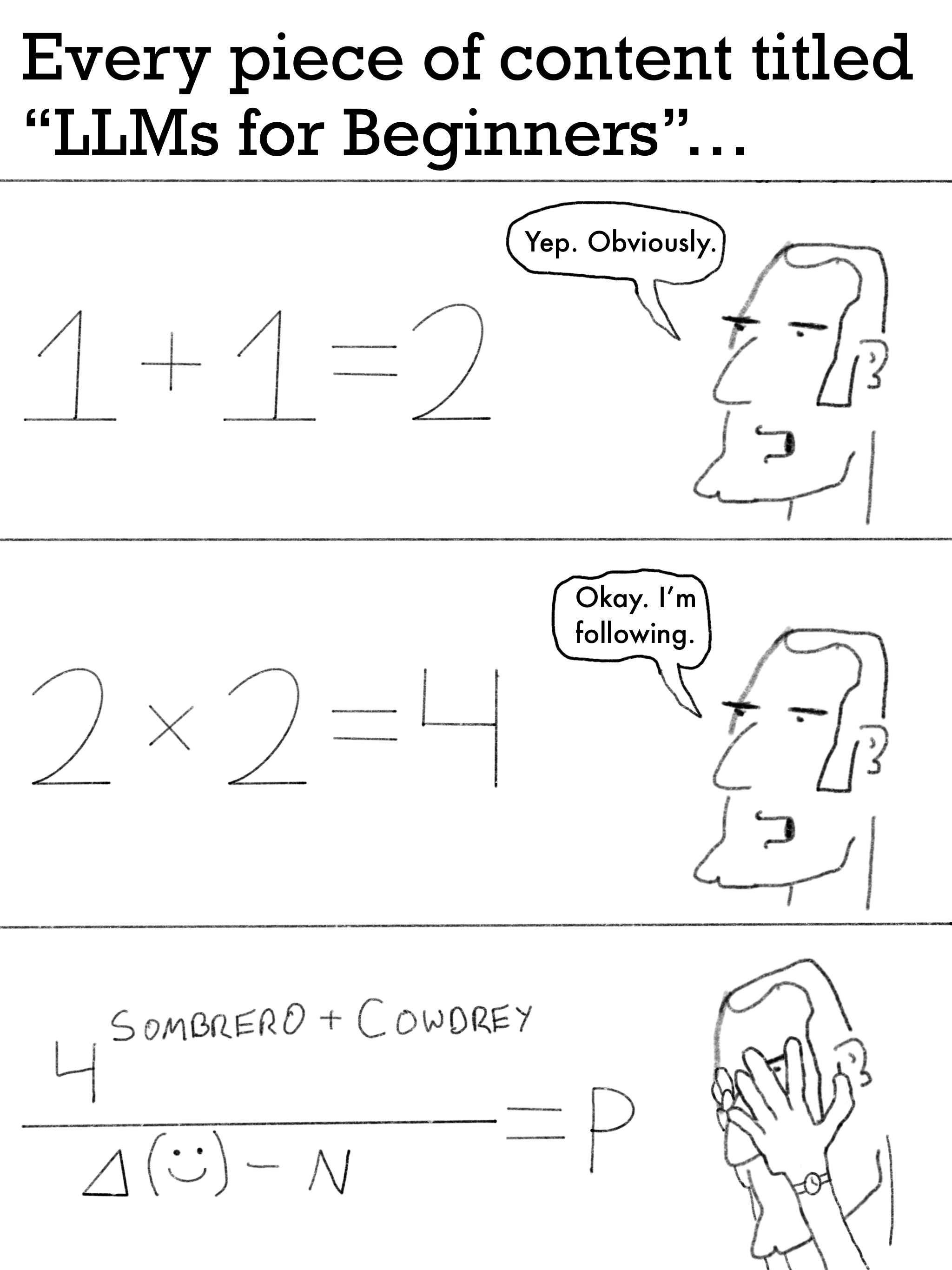

That’s why I made an LLM Study Guide in the past to help guide my learning. What I found, though, is that there’s no obvious place to start with this stuff, because there’s always another AI principle or term that pops up and needs researching before you can move along. Cowdrey’s comic today perfectly sums up this conundrum:

The challenge of learning LLMs is where to begin. It’s almost better to just be led by your curiosity and see what you learn. With that being said, this is what I learned today about LLMs.

WTF? The First Language Model

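A language model, in essence, is a way to assign probabilities to sequences of words, and in particular to predict how likely each word is to come next. From there, you can use a language model to generate text, recognize speech, translate between languages, recognize printed or handwritten characters, etc.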
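Formally, a language model assigns a probability to an entire sequence of m words. The chain rule of probability (a standard identity, not something specific to any one model) splits that probability into a product of next-word predictions, which is why “predicting the next word” and “modeling language” end up being the same job:

```latex
P(w_1, w_2, \ldots, w_m) = \prod_{i=1}^{m} P(w_i \mid w_1, \ldots, w_{i-1})
```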

You can break language models into two categories:

- Statistical language models

- Neural language models

The magical Generative AI we have today with ChatGPT (and others) is built on neural language models. But trying to understand neural language models before statistical language models is like trying to make an omelet before you can crack an egg.

Statistical language models came first, and they’re much simpler to understand. A statistical language model estimates a probability distribution over words by counting them in a given text or dataset. Those probabilities give it a way to predict which word is likely to come next in a sentence.

The simplest type of statistical language model is a unigram language model. It treats every word as independent of the words around it. In other words, it looks only at how often each individual word shows up in a text.
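Written as a formula, the unigram independence assumption means the probability of a sentence is simply the product of its words’ individual probabilities, each estimated by counting. Here N stands for the total number of words in the training text, a symbol introduced just for this illustration:

```latex
P_{\text{uni}}(w_1, \ldots, w_m) = \prod_{i=1}^{m} P(w_i),
\qquad
P(w) = \frac{\operatorname{count}(w)}{N}
```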

For example, a unigram language model trained on this Everydays note would first list every unique word and then count how many times each one appears.

29 – the

24 – a

23 – to

23 – language

18 – models

16 – model

14 – of

Etc…
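Here is a minimal Python sketch of that counting step. It assumes a crude tokenizer (lowercase the text, keep runs of letters and apostrophes); real tokenizers make different choices, so running it on this note would not reproduce the exact counts listed. The `tokenize` and `unigram_counts` helpers are hypothetical names for illustration.

```python
import re
from collections import Counter


def tokenize(text):
    # Assumption: lowercase, then treat runs of letters/apostrophes as words.
    return re.findall(r"[a-z']+", text.lower())


def unigram_counts(text):
    """Map every unique word to the number of times it appears."""
    return Counter(tokenize(text))


sample = "A language model predicts words. A unigram model counts words."
counts = unigram_counts(sample)

# Most frequent words first, printed in the same "count – word" style.
for word, count in counts.most_common(3):
    print(count, "–", word)
```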

Then, you’d divide each word’s count by the total number of words in this note to get its probability.

0.0414 – the

0.0342 – a

0.0329 – to

0.0329 – language

0.0257 – models

0.0229 – model

0.0200 – of

Etc…
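A rough sketch of the division step in Python, plus what the model does with those numbers: a sentence’s score is just the sum of its words’ log-probabilities. The note doesn’t state its total word count, so the 700-word total below is an assumption (it roughly matches the listed figures), and every unlisted word is lumped into a hypothetical `<other>` bucket.

```python
import math
from collections import Counter


def unigram_probabilities(counts):
    """Maximum-likelihood estimate: P(w) = count(w) / total words."""
    total = sum(counts.values())
    return {word: c / total for word, c in counts.items()}


def sentence_log_prob(words, probs):
    """Log-probability of a word sequence under a unigram model.

    Each word contributes log P(w) independently, so word order is ignored.
    Assumes every word was seen in training (unseen words raise KeyError).
    """
    return sum(math.log(probs[w]) for w in words)


# Counts from the list in this note; the 700-word total is an assumption.
counts = Counter({"the": 29, "a": 24, "to": 23, "language": 23,
                  "models": 18, "model": 16, "of": 14})
counts["<other>"] = 700 - sum(counts.values())  # hypothetical catch-all bucket

probs = unigram_probabilities(counts)
print(f"{probs['the']:.4f} – the")
print(sentence_log_prob(["the", "language", "model"], probs))
print(sentence_log_prob(["model", "the", "language"], probs))
```

The last two lines print the same value (up to floating-point rounding): shuffling the words doesn’t change the score, which is exactly the missing context discussed next.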

These probabilities would then form the basis of the model’s entire “understanding” of human language.

As you might guess, unigram language models aren’t very effective for something like generating new text, because their probabilities lack context: the model has no idea which word came before. That’s why there are bigram models (two-word sequences), trigram models (three-word sequences), and, more generally, n-gram models (where “n” is any number). By looking at the probability of a word given the one or two words before it, you add context to the model. Generally speaking, the larger “n” is, the more accurately you can model human language. But the number of possible n-grams grows quickly with n, which demands more memory, more computation, and far more training data.
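To see how a bigram model adds context, here is a minimal Python sketch that estimates P(next | previous) as count(previous, next) divided by how often “previous” is followed by any word. The toy sentence and the whitespace split are assumptions for illustration; `bigram_model` is a hypothetical helper, not a library function.

```python
from collections import Counter


def bigram_model(words):
    """Estimate P(next | prev) = count(prev, next) / count(prev as a left context)."""
    pair_counts = Counter(zip(words, words[1:]))
    left_counts = Counter(words[:-1])  # how often each word is followed by something
    model = {}
    for (prev, nxt), c in pair_counts.items():
        model.setdefault(prev, {})[nxt] = c / left_counts[prev]
    return model


# Toy corpus, split on whitespace (an assumption, not a real tokenizer).
words = "the cat sat on the mat the cat ran".split()
model = bigram_model(words)
print(model["the"])  # next-word distribution after "the"
print(model["cat"])  # next-word distribution after "cat"
```

Dividing by how often a word appears as a left context, rather than by its total count, makes each word’s next-word distribution sum to 1. A trigram version would key the table on pairs of previous words instead of single words.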

So what is (was) a unigram model good for?

Really, it’s mostly good for Information Retrieval, also known as IR.

> IR is not the place where you most immediately need complex language models, since IR does not directly depend on the structure of sentences to the extent that other tasks like speech recognition do. Unigram models are often sufficient to judge the topic of a text. Moreover, as we shall see, IR language models are frequently estimated from a single document and so it is questionable whether there is enough training data to do more.
>
> With limited training data, a more constrained model tends to perform better. In addition, unigram models are more efficient to estimate and apply than higher-order models.

– Stanford NLP

Basically, unigram language models were effective for capturing how often a word shows up in a given document. Like a rudimentary form of internet search, a unigram model can help identify the documents or passages most likely to contain the words you’re looking for.
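To make the “rudimentary search” idea concrete, here is a minimal Python sketch of query-likelihood ranking, one common way unigram models are used in IR: each document gets its own unigram model, and documents are ranked by how probable that model makes the query. Assumptions: a crude lowercase tokenizer, and smoothing by linear interpolation with a collection-wide unigram model, using a weight `lam = 0.5` chosen arbitrarily so that a query word missing from one document doesn’t zero out its score. The function names and sample documents are hypothetical.

```python
import re
from collections import Counter


def tokenize(text):
    # Assumption: lowercase, keep runs of letters/apostrophes as words.
    return re.findall(r"[a-z']+", text.lower())


def query_likelihood(query, doc, collection_counts, collection_total, lam=0.5):
    """Product over query words of a mix of document and collection unigram probabilities."""
    doc_counts = Counter(tokenize(doc))
    doc_total = sum(doc_counts.values())  # assumes a non-empty document
    score = 1.0
    for term in tokenize(query):
        p_doc = doc_counts[term] / doc_total
        p_coll = collection_counts[term] / collection_total
        score *= lam * p_doc + (1 - lam) * p_coll
    return score


docs = [
    "Unigram models count how often each word appears.",
    "Neural networks learn dense representations of text.",
]
collection = Counter(w for d in docs for w in tokenize(d))
total = sum(collection.values())

ranked = sorted(docs, key=lambda d: query_likelihood("unigram word", d, collection, total),
                reverse=True)
print(ranked[0])
```

Because the score multiplies per-word probabilities, word order in the query never matters; that is fine for judging what a document is about, which is the point the Stanford NLP passage makes.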

That’s a unigram language model in a nutshell – the simplest place to start for understanding language models. And it only gets harder from here.
